The API provides several features, such as gender, age, and emotion estimation. You can apply all of them, or a subset, to extract information about the people in your images. Requesting only the features you need is preferred for performance reasons. The maximum allowed image size is 4 MB.
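
Because of the 4 MB limit, it can be worth checking a file's size before uploading it. The following is a minimal sketch; the helper name `is_within_size_limit` is hypothetical, and reading "4 MB" as 4 * 1024 * 1024 bytes is an assumption, since the exact byte count is not stated here.

```python
import os

# Assumption: "4 MB" is interpreted as 4 * 1024 * 1024 bytes.
MAX_IMAGE_BYTES = 4 * 1024 * 1024


def is_within_size_limit(path, limit=MAX_IMAGE_BYTES):
    """Return True if the file at `path` is no larger than `limit` bytes."""
    return os.path.getsize(path) <= limit
```

Calling `is_within_size_limit('first-example-gender.jpg')` before sending the request lets you skip or resize oversized images locally.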

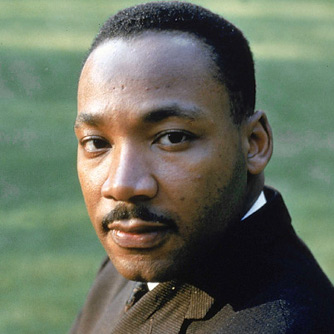

Let's start by using the Rosto.io API to figure out the gender of the person in the example picture. The example calls below send the image as a file.

curl:

    curl -H 'Authorization: Token token="TOKEN"' -F 'features=gender' -F "image=@first-example-gender.jpg" https://www.rosto.io/api/v1/features

Python:

    import json

    import requests

    # Upload the image as multipart form data together with the requested features.
    with open('first-example-gender.jpg', 'rb') as image_file:
        response = requests.post('https://www.rosto.io/api/v1/features',
                                 headers={'Authorization': 'Token token=TOKEN'},
                                 data={'features': 'gender'},
                                 files={'image': image_file})

    print(json.loads(response.text))

Ruby:

    require 'json'
    require 'faraday'

    # Multipart encoding is needed to upload the image file.
    conn = Faraday.new url: 'https://www.rosto.io' do |faraday|
      faraday.request :multipart
      faraday.adapter Faraday.default_adapter
    end

    response = conn.post do |req|
      req.headers['Authorization'] = "Token token=TOKEN"
      req.url '/api/v1/features'
      req.body = {
        features: 'gender',
        image: Faraday::UploadIO.new('first-example-gender.jpg', 'image/jpeg')
      }
    end

    puts JSON.parse(response.body)

Regardless of which language you tried above, the result should be the following:

    {
      "faces": [
        {
          "gender": {
            "classes": {
              "man": 0.98,
              "woman": 0.02
            },
            "probability": 0.98,
            "value": "man"
          }
        }
      ]
    }

The JSON result always contains a faces array with one element per face found in the image, and each element holds the result of every requested feature. In this case only the gender feature was requested and there is only one face in the picture, so the array holds a single nested element. As expected, the gender "value" for the person in this image is "man".
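
To make the structure concrete, here is a minimal sketch of reading such a result in Python. The function name `describe_faces` is hypothetical; the keys (`faces`, `gender`, `value`, `probability`) are taken from the sample response above, and the sketch assumes other classification features follow the same layout.

```python
def describe_faces(result, feature="gender"):
    """Return one (value, probability) tuple per face that has `feature`."""
    summaries = []
    for face in result.get("faces", []):
        prediction = face.get(feature)
        if prediction is None:
            continue  # feature not requested, or absent for this face
        summaries.append((prediction["value"], prediction["probability"]))
    return summaries


# Sample data copied from the response shown above.
sample = {
    "faces": [
        {
            "gender": {
                "classes": {"man": 0.98, "woman": 0.02},
                "probability": 0.98,
                "value": "man",
            }
        }
    ]
}

print(describe_faces(sample))  # [('man', 0.98)]
```

Iterating over `faces` rather than indexing the first element keeps the code correct for images with several faces or none.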

Besides sending the image as a file, you can also send a public URL of the image. Under the hood, the image is downloaded and the requested features are processed.

curl:

    curl -H 'Authorization: Token token="TOKEN"' -d 'features=gender,age' -d 'url=https://www.rosto.io/images/first-example-gender.jpg' https://www.rosto.io/api/v1/features

Python:

    import json

    import requests

    # Send the image URL and the requested features as form fields.
    response = requests.post('https://www.rosto.io/api/v1/features',
                             headers={'Authorization': 'Token token=TOKEN'},
                             data={'features': 'gender,age',
                                   'url': 'https://www.rosto.io/images/first-example-gender.jpg'})

    print(json.loads(response.text))

Ruby:

    require 'json'
    require 'faraday'

    # No file is uploaded here, so URL-encoded form data is enough.
    conn = Faraday.new url: 'https://www.rosto.io' do |faraday|
      faraday.request :url_encoded
      faraday.adapter Faraday.default_adapter
    end

    response = conn.post do |req|
      req.headers['Authorization'] = "Token token=TOKEN"
      req.url '/api/v1/features'
      req.body = {
        features: 'gender,age',
        url: 'https://www.rosto.io/images/first-example-gender.jpg'
      }
    end

    puts JSON.parse(response.body)
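
The calls above pass the requested features as a single comma-separated string (`gender,age`). A small helper can build that form data from a Python list. This is only a sketch: `build_payload` is a hypothetical name, and the `features` and `url` field names are taken from the examples above.

```python
def build_payload(features, image_url=None):
    """Build the form fields for a request; features are joined with commas."""
    payload = {"features": ",".join(features)}
    if image_url is not None:
        payload["url"] = image_url  # omit when the image is uploaded as a file
    return payload


payload = build_payload(
    ["gender", "age"],
    image_url="https://www.rosto.io/images/first-example-gender.jpg",
)
print(payload["features"])  # gender,age
```

The resulting dictionary can be passed as the `data` argument of `requests.post`, as in the Python example above.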

The only difference between the first example and requests for other features is the list of features given as input; you can pass as many as you need in the features parameter, separated by commas. The following are the available features:

The age feature interprets the input face and predicts the age of the person in the image.

Below is the response for the example image:

    {
      "faces": [
        {
          "age": 10
        }
      ]
    }

The face bounds feature returns a rectangle that delimits the face area. The result has left, top, right and bottom coordinates.
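
As a rough illustration of working with these coordinates, the sketch below derives the rectangle's width and height and a `(left, top, right, bottom)` tuple. The helper names are hypothetical, the sample values come from this section's example response, and treating the values as pixel coordinates with the origin at the top-left corner is an assumption.

```python
def bounds_size(bounds):
    """Return (width, height) of the face rectangle."""
    width = bounds["right"] - bounds["left"]
    height = bounds["bottom"] - bounds["top"]
    return width, height


def bounds_box(bounds):
    """Return the rectangle as a (left, top, right, bottom) tuple."""
    return (bounds["left"], bounds["top"], bounds["right"], bounds["bottom"])


# Values copied from this section's sample response.
sample_bounds = {"bottom": 106, "left": 157, "right": 193, "top": 70}

print(bounds_size(sample_bounds))  # (36, 36)
print(bounds_box(sample_bounds))   # (157, 70, 193, 106)
```

A `(left, top, right, bottom)` tuple is the box order that image libraries such as Pillow expect when cropping, which makes it convenient for cutting the face out of the original image.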

Below is the response for the example image:

    {
      "faces": [
        {
          "bounds": {
            "bottom": 106,
            "left": 157,
            "right": 193,
            "top": 70
          }
        }
      ]
    }

The emotion feature interprets the input face and returns one of the following emotions: angry, disgust, fear, happy, sad, surprise, neutral.
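
Besides the single top emotion, the per-class scores can be ranked to see which other emotions are plausible. The following is a minimal sketch; `rank_classes` is a hypothetical helper, and the `classes` layout and sample scores are taken from this section's example response.

```python
def rank_classes(prediction, minimum=0.0):
    """Return (label, score) pairs sorted from most to least likely."""
    ranked = sorted(
        prediction["classes"].items(), key=lambda item: item[1], reverse=True
    )
    return [(label, score) for label, score in ranked if score >= minimum]


# Scores copied from this section's sample response.
sample_emotion = {
    "classes": {
        "angry": 0.01, "disgust": 0.0, "fear": 0.0, "happy": 0.98,
        "neutral": 0.0, "sad": 0.0, "surprise": 0.0,
    },
    "probability": 0.98,
    "value": "happy",
}

print(rank_classes(sample_emotion, minimum=0.01))  # [('happy', 0.98), ('angry', 0.01)]
```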

Below is the response for the example image:

    {
      "faces": [
        {
          "emotion": {
            "classes": {
              "angry": 0.01,
              "disgust": 0.0,
              "fear": 0.0,
              "happy": 0.98,
              "neutral": 0.0,
              "sad": 0.0,
              "surprise": 0.0
            },
            "probability": 0.98,
            "value": "happy"
          }
        }
      ]
    }

The ethnicity feature interprets the input face and returns one of the following ethnicities: asian, black, indian, white.

Below is the response for the example image:

    {
      "faces": [
        {
          "ethnicity": {
            "classes": {
              "asian": 0.0,
              "black": 0.0,
              "indian": 0.0,
              "white": 1.0
            },
            "probability": 1.0,
            "value": "white"
          }
        },
        {
          "ethnicity": {
            "classes": {
              "asian": 0.0,
              "black": 0.0,
              "indian": 0.01,
              "white": 0.99
            },
            "probability": 0.99,
            "value": "white"
          }
        }
      ]
    }

The glasses feature interprets the input face and returns one of the following classes: not using glasses, swimming goggles, or using glasses.

Below is the response for the example image:

    {
      "faces": [
        {
          "glasses": {
            "classes": {
              "not-using-glasses": 0.0,
              "swimming-goggles": 0.12,
              "using-glasses": 0.88
            },
            "probability": 0.88,
            "value": "using-glasses"
          }
        }
      ]
    }

The hair feature returns a set of (x, y) coordinates outlining the hair area, the hair's hex color, and the closest color name. This feature is in beta; it works best with square, portrait-like images.
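
The hex color and the outline points lend themselves to simple post-processing. The sketch below converts the hex color to RGB and computes the area enclosed by one polygon with the shoelace formula. Both helper names are hypothetical, and the sketch assumes each polygon is a list of `{"x": ..., "y": ...}` points, as in this section's example response.

```python
def hex_to_rgb(hex_color):
    """Convert a '#rrggbb' string into an (r, g, b) tuple of integers."""
    value = hex_color.lstrip("#")
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


def polygon_area(points):
    """Area of a simple polygon given as [{'x': ..., 'y': ...}, ...] (shoelace formula)."""
    total = 0
    count = len(points)
    for i in range(count):
        current = points[i]
        following = points[(i + 1) % count]  # wrap around to close the polygon
        total += current["x"] * following["y"] - following["x"] * current["y"]
    return abs(total) / 2


print(hex_to_rgb("#121117"))  # (18, 17, 23)

# A 4 x 3 rectangle as a quick sanity check.
rectangle = [{"x": 0, "y": 0}, {"x": 4, "y": 0}, {"x": 4, "y": 3}, {"x": 0, "y": 3}]
print(polygon_area(rectangle))  # 12.0
```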

Below is the response for the example image:

    {
      "faces": [
        {
          "hair": {
            "color": {
              "hex": "#121117",
              "name": "black"
            },
            "polygons": [
              [
                { "x": 142, "y": 3 }, { "x": 140, "y": 5 }, { "x": 135, "y": 5 }, { "x": 134, "y": 6 },
                { "x": 133, "y": 6 }, { "x": 131, "y": 8 }, { "x": 128, "y": 8 }, { "x": 128, "y": 9 },
                { "x": 127, "y": 10 }, { "x": 121, "y": 10 }, { "x": 118, "y": 13 }, { "x": 116, "y": 13 },
                { "x": 112, "y": 17 }, { "x": 112, "y": 18 }, { "x": 109, "y": 21 }, { "x": 108, "y": 21 },
                { "x": 107, "y": 22 }, { "x": 107, "y": 24 }, { "x": 105, "y": 26 }, { "x": 104, "y": 26 },
                { "x": 102, "y": 28 }, { "x": 102, "y": 30 }, { "x": 98, "y": 34 }, { "x": 97, "y": 34 },
                { "x": 97, "y": 35 }, { "x": 95, "y": 37 }, { "x": 95, "y": 38 }, { "x": 94, "y": 39 },
                { "x": 94, "y": 40 }, { "x": 93, "y": 41 }, { "x": 93, "y": 42 }, { "x": 92, "y": 43 },
                { "x": 92, "y": 48 }, { "x": 95, "y": 48 }, { "x": 102, "y": 41 }, { "x": 105, "y": 41 },
                { "x": 108, "y": 38 }, { "x": 129, "y": 38 }, { "x": 131, "y": 36 }, { "x": 139, "y": 36 },
                { "x": 142, "y": 33 }, { "x": 144, "y": 33 }, { "x": 147, "y": 30 }, { "x": 170, "y": 30 },
                { "x": 171, "y": 31 }, { "x": 172, "y": 31 }, { "x": 173, "y": 32 }, { "x": 174, "y": 32 },
                { "x": 175, "y": 33 }, { "x": 189, "y": 33 }, { "x": 190, "y": 34 }, { "x": 190, "y": 35 },
                { "x": 191, "y": 35 }, { "x": 192, "y": 36 }, { "x": 194, "y": 36 }, { "x": 196, "y": 38 },
                { "x": 199, "y": 38 }, { "x": 204, "y": 43 }, { "x": 206, "y": 43 }, { "x": 207, "y": 44 },
                { "x": 208, "y": 44 }, { "x": 209, "y": 45 }, { "x": 209, "y": 46 }, { "x": 210, "y": 46 },
                { "x": 211, "y": 47 }, { "x": 211, "y": 48 }, { "x": 212, "y": 49 }, { "x": 212, "y": 50 },
                { "x": 214, "y": 52 }, { "x": 214, "y": 53 }, { "x": 216, "y": 55 }, { "x": 216, "y": 57 },
                { "x": 217, "y": 58 }, { "x": 217, "y": 68 }, { "x": 219, "y": 70 }, { "x": 219, "y": 71 },
                { "x": 222, "y": 74 }, { "x": 222, "y": 75 }, { "x": 223, "y": 76 }, { "x": 223, "y": 77 },
                { "x": 224, "y": 78 }, { "x": 224, "y": 79 }, { "x": 225, "y": 80 }, { "x": 230, "y": 75 },
                { "x": 231, "y": 75 }, { "x": 232, "y": 74 }, { "x": 233, "y": 74 }, { "x": 235, "y": 72 },
                { "x": 236, "y": 72 }, { "x": 237, "y": 73 }, { "x": 237, "y": 74 }, { "x": 238, "y": 75 },
                { "x": 239, "y": 75 }, { "x": 241, "y": 77 }, { "x": 241, "y": 76 }, { "x": 242, "y": 75 },
                { "x": 243, "y": 75 }, { "x": 244, "y": 74 }, { "x": 245, "y": 74 }, { "x": 245, "y": 73 },
                { "x": 246, "y": 72 }, { "x": 248, "y": 74 }, { "x": 250, "y": 74 }, { "x": 251, "y": 75 },
                { "x": 252, "y": 74 }, { "x": 252, "y": 53 }, { "x": 247, "y": 48 }, { "x": 247, "y": 42 },
                { "x": 244, "y": 39 }, { "x": 244, "y": 37 }, { "x": 230, "y": 23 }, { "x": 228, "y": 23 },
                { "x": 226, "y": 21 }, { "x": 225, "y": 21 }, { "x": 222, "y": 18 }, { "x": 220, "y": 18 },
                { "x": 215, "y": 13 }, { "x": 211, "y": 13 }, { "x": 210, "y": 12 }, { "x": 209, "y": 12 },
                { "x": 207, "y": 10 }, { "x": 206, "y": 10 }, { "x": 205, "y": 9 }, { "x": 205, "y": 8 },
                { "x": 202, "y": 8 }, { "x": 199, "y": 5 }, { "x": 194, "y": 5 }, { "x": 192, "y": 3 },
                { "x": 143, "y": 3 }
              ]
            ]
          }
        }
      ]
    }

Landmarks are key areas of a face, such as the nose, jaw, lips, and left and right eyes. The API provides 71 (x, y) coordinates on the face. In the example images, the original image is on the left; the image on the right shows all landmark points: jaw (green), left eye and its center (red), right eye and its center (red), left eyebrow (blue), right eyebrow (blue), nose (white), under lip (yellow), upper lip (purple), and mouth center (black).
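
Landmark coordinates can be combined into simple geometric measures. As a minimal sketch, the hypothetical helper below computes the distance between the two eye centers; the key names `left_eye_center` and `right_eye_center` and the sample values are taken from this section's example response, and the unit is assumed to be pixels.

```python
import math


def eye_distance(landmarks):
    """Euclidean distance between the left and right eye centers."""
    left = landmarks["left_eye_center"]
    right = landmarks["right_eye_center"]
    return math.hypot(left["x"] - right["x"], left["y"] - right["y"])


# Eye centers copied from this section's sample response.
sample_landmarks = {
    "left_eye_center": {"x": 108, "y": 51},
    "right_eye_center": {"x": 71, "y": 55},
}

print(round(eye_distance(sample_landmarks), 2))
```

A measure like this is often used to normalize other distances on the face so they are comparable across images of different sizes.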

Below is the response for the example image:

    {
      "faces": [
        {
          "landmarks": {
            "jaw": [
              { "x": 58, "y": 59 }, { "x": 59, "y": 68 }, { "x": 61, "y": 78 }, { "x": 64, "y": 88 },
              { "x": 67, "y": 98 }, { "x": 72, "y": 107 }, { "x": 79, "y": 115 }, { "x": 87, "y": 122 },
              { "x": 97, "y": 124 }, { "x": 108, "y": 121 }, { "x": 119, "y": 112 }, { "x": 128, "y": 103 },
              { "x": 135, "y": 93 }, { "x": 139, "y": 82 }, { "x": 140, "y": 70 }, { "x": 140, "y": 58 },
              { "x": 139, "y": 47 }
            ],
            "left_eye": [
              { "x": 100, "y": 54 }, { "x": 105, "y": 49 }, { "x": 111, "y": 48 },
              { "x": 116, "y": 51 }, { "x": 112, "y": 54 }, { "x": 106, "y": 54 }
            ],
            "left_eye_center": { "x": 108, "y": 51 },
            "left_eyebrow": [
              { "x": 91, "y": 45 }, { "x": 98, "y": 41 }, { "x": 107, "y": 39 },
              { "x": 116, "y": 39 }, { "x": 124, "y": 43 }
            ],
            "mouth_center": { "x": 91, "y": 99 },
            "nose": [
              { "x": 86, "y": 54 }, { "x": 86, "y": 62 }, { "x": 85, "y": 70 },
              { "x": 85, "y": 78 }, { "x": 82, "y": 83 }, { "x": 85, "y": 85 },
              { "x": 88, "y": 86 }, { "x": 93, "y": 84 }, { "x": 97, "y": 82 }
            ],
            "right_eye": [
              { "x": 65, "y": 57 }, { "x": 69, "y": 53 }, { "x": 74, "y": 53 },
              { "x": 80, "y": 57 }, { "x": 74, "y": 58 }, { "x": 69, "y": 59 }
            ],
            "right_eye_center": { "x": 71, "y": 55 },
            "right_eyebrow": [
              { "x": 58, "y": 52 }, { "x": 61, "y": 47 }, { "x": 67, "y": 45 },
              { "x": 74, "y": 45 }, { "x": 80, "y": 46 }
            ],
            "under_lip": [
              { "x": 103, "y": 104 }, { "x": 97, "y": 107 }, { "x": 92, "y": 108 },
              { "x": 87, "y": 108 }, { "x": 82, "y": 105 }, { "x": 81, "y": 100 },
              { "x": 106, "y": 97 }, { "x": 96, "y": 100 }, { "x": 91, "y": 101 },
              { "x": 86, "y": 101 }
            ],
            "upper_lip": [
              { "x": 79, "y": 100 }, { "x": 81, "y": 97 }, { "x": 86, "y": 94 },
              { "x": 90, "y": 96 }, { "x": 95, "y": 93 }, { "x": 102, "y": 95 },
              { "x": 109, "y": 97 }, { "x": 86, "y": 98 }, { "x": 91, "y": 99 },
              { "x": 96, "y": 97 }
            ]
          }
        }
      ]
    }

The pose feature returns the face's roll (x), pitch (y) and yaw (z) angles in degrees, from -90 to 90.
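
One possible use of these angles is filtering out faces that are turned too far away from the camera. The sketch below is an illustration only: `is_roughly_frontal` and its 20-degree default threshold are hypothetical choices, while the key names `x_roll`, `y_pitch` and `z_yaw` come from this section's example responses.

```python
def is_roughly_frontal(pose, max_angle=20):
    """Return True if roll, pitch and yaw are all within +/- max_angle degrees."""
    return all(
        abs(pose[key]) <= max_angle for key in ("x_roll", "y_pitch", "z_yaw")
    )


# Angles copied from one of this section's sample responses.
sample_pose = {"x_roll": -3, "y_pitch": -52, "z_yaw": 4}

print(is_roughly_frontal(sample_pose))  # False
```

The sample face is rejected because its pitch of -52 degrees exceeds the threshold, even though roll and yaw are small.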

Below are the responses for each of the example images:

    {
      "faces": [
        {
          "pose": {
            "x_roll": -22,
            "y_pitch": -58,
            "z_yaw": 24
          }
        }
      ]
    }

    {
      "faces": [
        {
          "pose": {
            "x_roll": -15,
            "y_pitch": -56,
            "z_yaw": 16
          }
        }
      ]
    }

    {
      "faces": [
        {
          "pose": {
            "x_roll": -3,
            "y_pitch": -52,
            "z_yaw": 4
          }
        }
      ]
    }

The image quality feature measures face image quality by checking whether the face is blurry and by classifying its exposure level: underexposed, balanced, or overexposed.
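
These two checks can be combined into a simple usability filter. The following is a minimal sketch with a hypothetical helper name; the `blur.is_blurry` and `exposure.level` keys are taken from this section's example responses, and requiring a sharp, balanced face is just one possible policy.

```python
def is_usable(face):
    """Return True if the face is not blurry and its exposure is balanced."""
    blur = face.get("blur", {})
    exposure = face.get("exposure", {})
    # Treat a missing blur result as blurry so incomplete data is rejected.
    return not blur.get("is_blurry", True) and exposure.get("level") == "balanced"


# Face entries modeled on this section's sample responses.
sharp_but_dark = {
    "blur": {"is_blurry": False, "level": 581, "threshold": 100},
    "exposure": {"level": "underexposed"},
}
blurry_and_bright = {
    "blur": {"is_blurry": True, "level": 26, "threshold": 100},
    "exposure": {"level": "overexposed"},
}

print(is_usable(sharp_but_dark))     # False
print(is_usable(blurry_and_bright))  # False
```

In the sample responses, `is_blurry` is true when `level` falls below `threshold`; whether that rule holds in general is not stated here.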

Below the response for each of the given images

"faces": [

{

"blur": {

"is_blurry": false,

"level": 581,

"threshold": 100

},

"exposure": {

"level": "underexposed"

}

}

]"faces": [

{

"blur": {

"is_blurry": true,

"level": 26,

"threshold": 100

},

"exposure": {

"level": "overexposed"

}

},

{

"blur": {

"is_blurry": true,

"level": 17,

"threshold": 100

},

"exposure": {

"level": "overexposed"

}

}

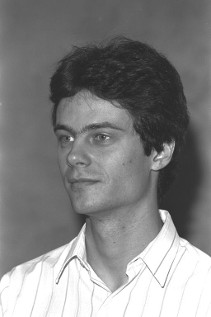

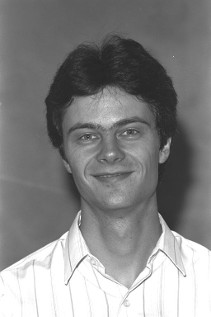

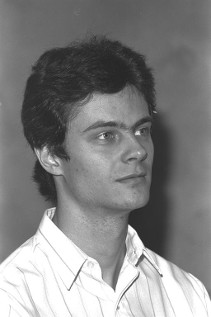

]It interprets input faces and return whether people are smiling or not.
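
Since the result holds one entry per face, counting smiling people is a short loop. This is a minimal sketch; `count_smiling` is a hypothetical helper, and the `smile.is_smiling` key and sample values are taken from this section's example response.

```python
def count_smiling(result):
    """Count the faces whose smile result reports is_smiling as true."""
    return sum(
        1
        for face in result.get("faces", [])
        if face.get("smile", {}).get("is_smiling")
    )


# Values copied from this section's sample response.
sample_smiles = {
    "faces": [
        {"smile": {"is_smiling": True, "probability": 1.0}},
        {"smile": {"is_smiling": False, "probability": 0.05}},
        {"smile": {"is_smiling": False, "probability": 0.03}},
    ]
}

print(count_smiling(sample_smiles))  # 1
```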

Below is the response for the example image:

    {
      "faces": [
        {
          "smile": {
            "is_smiling": true,
            "probability": 1.0
          }
        },
        {
          "smile": {
            "is_smiling": false,
            "probability": 0.05
          }
        },
        {
          "smile": {
            "is_smiling": false,
            "probability": 0.03
          }
        }
      ]
    }